In this article, you’ll learn everything you need to know about Large Language Models (LLMs) in a practical and simple way. You’ll find out what exactly they are, how they work inside, and what their most important applications are in 2025. You will also learn about the risks you should take into account and how to choose the most suitable model for your needs.

If you’ve ever wondered how ChatGPT, Claude, or Gemini can understand and generate text almost like a human, here are the answers. We will show you everything from the basics to the most advanced trends, always with practical examples that you can apply.

Key points

LLMs represent a technological revolution that is transforming the way we interact with machines and process information. Here are the essential points you should know:

• LLMs are language-specialized AI models that use Transformer architectures to process trillions of parameters and generate human-like text.

• Its training requires massive resources: GPT-3 consumed 1,287 MWh of electricity and produced more than 500 tons of CO2, equivalent to 600 transatlantic flights.

• Practical applications include content generation, multilingual translation, code conversion, and virtual assistants that save 30-90% of the time on routine tasks.

• The main risks are hallucinations (2.5-15% of false answers), biases inherited from training data, and privacy and security vulnerabilities.

• The future points towards more efficient multimodal models, contexts of up to 2 million tokens, and responsible regulation such as the new European AI law.

Understanding these capabilities and limitations will allow you to harness the potential of LLMs as you responsibly navigate this ever-evolving technological landscape.

What is an LLM or ‘Large Language Model’?

Imagine a system that can read millions of books, articles, and web pages in a matter of days, and then use all that knowledge to hold conversations, write texts, or solve complex problems. That’s exactly what a Large Language Model does.

An LLM is a type of artificial intelligence specialized in understanding and generating human language. Unlike traditional programs that follow specific rules, these models learn language patterns by analyzing huge amounts of text. The result is amazing: they can write like people, translate languages, code code, or answer questions on virtually any topic.

Why are they so important now? Because they have reached a level of sophistication that allows them to perform tasks that previously could only be done by humans. ChatGPT, Claude, Gemini… all of these names you hear constantly are examples of LLMs that are changing the way we work, study, and communicate.

What is an LLM or ‘Large Language Model’?

Technical definition of LLM

A Large Language Model (LLM) is an artificial intelligence system trained on huge amounts of text that can understand and generate human language in a surprisingly natural way. Think of it as a program that has “read” millions of books, articles, and web pages, and can now hold conversations, write texts, and answer questions consistently.

The technical basis of these models is transformers, an architecture of neural networks that functions as a sophisticated system of attention. Imagine you’re reading a sentence: transformers can “look” at all the words at the same time and understand how they relate to each other, rather than processing them one by one as previous systems did.

During training, these systems learn to predict which word comes next in a text, gradually developing a deep understanding of grammar, meaning, and context. It is a self-learning process where the model discovers linguistic patterns without anyone teaching him specific rules.

Difference Between LLM and Other AI Models

Have you ever wondered what makes LLMs special compared to other types of artificial intelligence? The main difference lies in their specialization and focus.

While AI is a broad term that includes any system that mimics human capabilities, LLMs concentrate exclusively on language. Other AI models may be designed to recognize images, predict market trends, or control robots, but LLMs are experts only at understanding and generating text.

Key differences include:

- Language specialization: LLMs master tasks such as translating, summarizing, or writing, while other AI models focus on completely different areas such as computer vision or data analysis.

- Specific architecture: They use optimized transformers to pick up relationships between words, something that other types of AI don’t necessarily require.

- Generative capabilities: Many LLMs can create original content, although generative AI encompasses more than text, including images, music, and code.

Why are they called ‘large’ language models?

The term “big” is not an advertising hype. He refers to three aspects that are really impressive for their magnitude:

Parameter size: LLMs contain trillions of parameters, which are like the “internal adjustments” that determine how they work. To give you an idea of the scale, GPT-3 has 175,000 million parameters, while Jurassic-1 has 178,000 million. It’s as if they have millions of small decisions programmed inside them.

Volume of training data: These models have processed massive amounts of text from the internet. We are talking about Common Crawl with more than 50,000 million web pages and Wikipedia complete with its 57 million articles. That’s more information than anyone could read in several lifetimes.

Computational capacity: Training an LLM requires supercomputers working for weeks or months. Each of those trillions of parameters must be fine-tuned, which demands extraordinary computing power.

The most prominent models you can currently use include:

- OpenAI’s GPT , known for its reasoning ability and coherent responses

- Claude who can analyze very long documents with up to 100,000 tokens

- Meta Flame , optimized for practical, real-world applications

- Google DeepMind’s Gemini , already integrated into Gmail and other services you probably use on a daily basis

Each has particular strengths, but they all share that “big” characteristic that makes them so powerful at working with human language.

Architecture of LLM models: how they are built

Have you ever wondered what’s behind ChatGPT’s ability to maintain a coherent conversation? In this section you will discover how these systems are built from the inside. We’ll show you in a simple way the main components that allow LLMs to understand and generate text as if they were human.

Remember that although the architecture may seem complex at first, understanding it will help you better choose which model to use according to your needs.

Deep neural networks and layers of attention

LLMs work through multi-layered neural networks that mimic, in some way, the functioning of the human brain. Think of these networks as a series of interconnected filters, where each layer processes and refines the information received from the previous layer.

The main difference with traditional models is the number of layers. While old systems used one or two layers, today’s LLMs employ hundreds or even thousands of layers to process information. Each node in these layers has a specific weight and threshold that determines whether it should be activated and pass information to the next layer.

Within this architecture, you’ll find three main types of layers:

- Embedding layers: Convert words into numbers that the model can understand

- Attention layers: Help the model identify which parts of the text are most important

- Forward Feed Layers: Process all this information to generate the final answer

Transformers and self-care

This is where the magic really happens. The Transformers, introduced in 2017, completely changed the game. This architecture allows the model to process all the words in a sentence at the same time, rather than word for word.

The self-care mechanism is at the heart of this technology. It works by evaluating the relationship between each word and all the other words in the text. For example, in the sentence “The dog in the park barks loudly,” the system understands “barks” to refer to “dog,” not “park.”

This ability to understand long-distance relationships was very difficult for previous models. In addition, by processing everything in parallel, training is much faster and more efficient.

Transformers use two main components: an encoder that understands the input text and a decoder that generates the response. However, some models such as GPT use only the decoder part.

Tokenization and embeddings

Before processing any text, LLMs need to convert it into numbers using tokenization. This process divides text into small units called tokens.

The most commonly used method is Byte Pair Encoding (BPE), which identifies the most frequent letter combinations and converts them into tokens. This allows the model to handle new words, proper nouns, and even typos intelligently.

Once tokenized, each word is converted into a numerical vector called embedding. These vectors capture the meaning of words in such a way that similar words have similar numbers.

Finally, since Transformers process everything simultaneously, they need to know the order of the words. To do this, they use positional encodings that are added to each embedding. Thus the model understands that “Mary loves John” is different from “John loves Mary.”

If you have any questions about these technical concepts, we recommend starting with the most practical aspects and then delving into the details according to your specific needs.

Training an LLM model: how much does it really cost?

Creating a large language model isn’t like installing a program on your computer. It is a process that consumes massive resources and determines whether the model will be useful or not. Here’s everything you need to know about this process.

How do LLMs learn? Two main methods

There are two main ways to train these models, each with its own advantages:

Autoregressive pretraining: Models like GPT work like a sentence-completing system. They learn to predict what the next word will be based on all the previous words. It’s like when you type on your mobile phone and it suggests the next word.

Masked pre-training: Models like BERT use a different method. Random words are hidden in a text and the model must guess which ones are missing. It’s like doing a fill-in-the-gaps exercise.

The key difference is that autoregressive models only look backwards to predict, while masked models can look both backwards and forwards, gaining a more complete understanding of the context.

The real costs: figures that will surprise you

Training an LLM is extraordinarily expensive. To give you a clear idea:

Massive energy consumption: GPT-3 needed 1,287 MWh of electricity for its training. This is equivalent to the annual consumption of more than 100 households.

Significant carbon footprint: The process generated more than 500 metric tons of CO2, comparable to 600 flights between New York and London or what 38 Spanish households produce in a full year.

High economic investment:

- A model with 1,500 million parameters cost 0.95 million euros in 2020

- GPT-3 (175 billion parameters) cost approximately €3.82 billion

- GPT-4 exceeded 95.42 million euros due to its greater complexity

Remember that these costs are divided into two phases: the initial training consumes 20-40% of the total energy but occurs only once. The continuous use phase can account for 50-60% of long-term energy expenditure.

Did you know that generating images consumes 2.907 kWh per 1,000 operations, while generating text only needs 0.047 kWh? Text generation is much more efficient.

How do they learn without human supervision?

Self-supervised learning is the key to successful LLMs. Unlike traditional methods that need manually labeled data, these models generate their own labels from raw text.

The process works like this: the model learns to predict missing parts of the text or the next word in a sequence. This allows you to train with huge amounts of text from the internet without the need for manual tagging.

The process is divided into two stages:

Step 1: Pretexting Tasks – The model learns representations of language through tasks such as predicting hidden words or completing sequences.

Step 2: Specific Tasks – The representations learned are applied to specific tasks such as translation or text classification.

There are two main techniques:

- Self-predictive learning: The model predicts parts of data based on other parts

- Contrastive Learning: The Model Learns to Distinguish Relationships Between Different Data Samples

This methodology makes it possible to efficiently take advantage of the massive computational resources necessary to create increasingly powerful LLMs.

Practical applications of LLMs in 2025

LLMs are no longer just laboratory experiments. By 2025, these tools solve real problems in companies in all sectors. Here are the most useful apps you can deploy today.

Automatic text generation and summaries

Do you need to process large volumes of information quickly? LLMs excel in their ability to create original content and summarize lengthy documents while maintaining key points.

Creating automatic summaries allows you to save significant time and resources when processing information. This functionality is especially valuable for:

- Research articles and technical documentation

- Legal and Financial Documents

- Customer Feedback Trend Analysis

- Business reports and market research

There are two main methods you can use. The extractive summary identifies and extracts the most relevant sentences from the original text, while the abstractive summary generates new sentences that capture the essence of the content. Depending on your needs, you can get anything from short summaries to detailed analyses.

More advanced tools also provide ranking scores to assess the relevance of extracted sentences and positional information to locate the most important elements.

Multilingual translation and code conversion

If you work with multiple languages or need to modernize old code, LLMs offer precise solutions that surpass traditional methods.

For translation, these models capture cultural nuances and preserve the tone of the original text. The DeepL model outperforms ChatGPT-4, Google, and Microsoft in quality, requiring significantly less revision: while Google needs twice as many corrections and ChatGPT-4 triple to reach the same quality.

In terms of code conversion, LLMs have proven to be extraordinarily effective in migrating legacy systems. The CodeScribe tool, for example, combines prompt engineering with human supervision to efficiently convert Fortran code to C++.

The results are impressive: while before the use of LLM you could convert 2-3 files per day, with these tools productivity increases to 10-12 files per day.

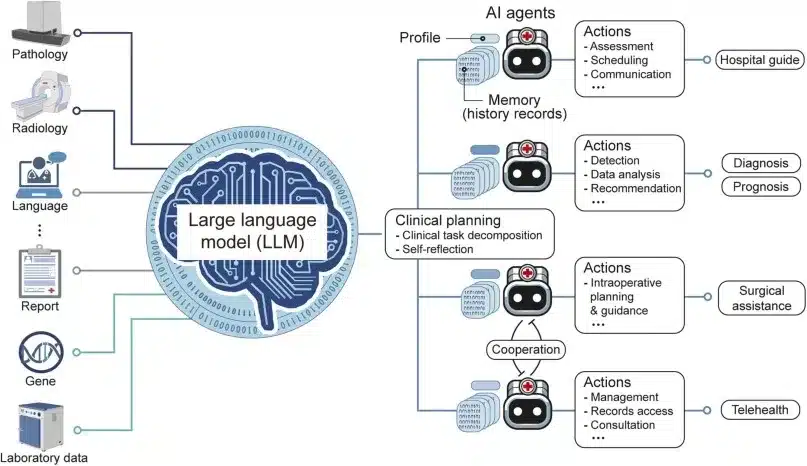

Virtual assistants and business chatbots

Do you want to automate customer service or improve internal processes? LLM-powered assistants maintain fluid conversations, understand complex questions, and adapt to each user’s style.

Remember that there is an important difference: AI assistants are reactive and perform tasks on demand, while AI agents are proactive and work autonomously to achieve specific goals.

A study among 167 companies identified customer service as the most popular use case for adopting LLM agents. In internal operations, these agents can save 30% to 90% of the time spent on routine tasks.

Automated support allows you to answer queries 24 hours a day, provide consistent information, and personalize the experience based on the customer’s history. In sales, you can use them to qualify prospects and gather valuable information without human intervention.

If you’re unsure which app is best suited to your business, we recommend starting with a pilot test in the area that currently consumes the most time.

How do you actually evaluate an LLM model?

If you’ve ever wondered how researchers determine whether an LLM is good or bad, here’s the answer. Evaluating these models isn’t as simple as it sounds, and knowing these methods will help you better understand what those scores you see in the comparisons really mean.

The perplexity metric: how “confused” is the model?

Perplexity is one of the most basic ways to measure how well an LLM works. Think of it as a model “confusion” meter: the lower the score, the less confused the model is in predicting the next word.

We explain it to you with a simple example. If you give a model the phrase “The cat is sleeping in the…” and you can easily predict that the next word is “couch,” you will have low perplexity. If you have no idea what comes next, the perplexity will be high.

The process works by calculating the inverse probability of the test text, normalized per word. When a model is very confident in its predictions, you get low numbers that indicate better performance.

Remember that perplexity, while useful, doesn’t tell you everything about the quality of the model. A model may be great at predicting words but terrible at creating coherent or creative texts.

Benchmarks: The “Driving Tests” of LLMs

In order to be able to compare models fairly, the scientific community has developed a series of standardized tests. They are like driving tests, but for artificial intelligence:

MMLU (Massive Multitask Language Understanding): This is the most comprehensive test, with about 16,000 multiple-choice questions covering 57 different subjects, from math to history. It is as if you had the model examined throughout the university career.

ARC (AI2 Reasoning Challenge): Contains more than 7,700 school-level science questions, divided into easy and difficult. Perfect for measuring whether the model can reason like a student.

TruthfulQA: This test is special because it measures whether the model is telling the truth or making up answers when they don’t know something. Very useful for detecting those “hallucinations” that we will talk about later.

There are also specialized tests such as HumanEval for programming, GSM8K for mathematics and Chatbot Arena where real users vote on which answers they prefer.

An interesting trend is “LLM-as-a-judge” evaluations, where an advanced model like GPT-4 acts as a judge to evaluate other models. The MT-Bench uses this methodology to test multi-turn conversations.

The problems with current evaluations

While these tests are useful, they have important limitations that you should be aware of. A recent study by Apple showed that the performance of LLMs “deteriorates when the complexity of the questions increases.” The researchers noted that small changes in the questions can completely alter the results, indicating that these models are “very flexible but also very fragile.”

Another serious problem is “data contamination.” As the models are trained with texts from the internet, there is a risk that they have “memorized” the answers of the tests, invalidating the results. It’s as if a student had seen the exam before doing it.

In addition, benchmarks are quickly “saturated”. When the most advanced models score 99%, they are no longer useful for measuring progress. That’s why they’ve developed more difficult tests like MMLU-Pro, which includes more complicated questions and more answer options.

The biggest limitation is that these assessments do not measure crucial aspects such as empathy, creativity, or pragmatic understanding of language, which are critical for real-world applications.

If you’re evaluating LLMs for your company or project, we recommend that you don’t rely solely on these scores. Hands-on tests with real-world use cases are often more revealing than academic benchmarks.

Risks and limitations of current LLMs

You’ve already seen the impressive capabilities of LLMs, but you also need to know their important limitations. These models, while powerful, present significant challenges that you should consider before deploying them to sensitive applications.

Hallucinations and generation of false information

Do you know what hallucinations are in language models? It is a phenomenon where these systems generate answers that sound convincing but contain completely false or invented information. This happens because LLMs operate through probabilistic word prediction, prioritizing that the text sounds coherent over the veracity of the facts.

An example that will help you understand the seriousness of the problem: a lawyer used ChatGPT to draft a legal brief with AI-generated references, only to later discover that the cited legal cases did not exist at all, resulting in penalties from the judge.

The data are revealing. Even the most advanced models hallucinate between 2.5% and 8.5% of the time in general tasks, a figure that can exceed 15% in some models. In specialized domains such as law, the rate of hallucinations can reach between 69% and 88% of responses.

Biases in training data

LLMs inherit and amplify the biases present in their training data. You can think of this as a student learning from books that contain historical biases – they will inevitably incorporate those biased perspectives.

Here’s a concrete example: when you ask ChatGPT for possible executive names, 60% of the names generated are male, while when requesting teacher names, most are female. This reveals how the models perpetuate gender stereotypes present in their training data.

In addition, a recent study found that the responses of the main LLM models tend to align with specific demographic profiles: mainly men, adults, highly educated, and with an interest in politics.

Privacy and security issues

When you interact with LLMs, you should consider the risks related to the privacy and security of your information. When entering data into these systems, there is a danger that this information could be stored, used for future training, or, in the worst scenario, exposed to third parties due to security vulnerabilities.

The most critical threats identified by OWASP include:

- Prompt Injection

- Unsafe handling of departures

- Training Data Poisoning

- Model denial of service

These vulnerabilities could allow attackers to manipulate the behavior of the LLM to extract sensitive information or execute malicious code.

A real case that illustrates these risks: Samsung employees shared confidential information with ChatGPT while using it for work tasks, exposing code and recordings of meetings that could potentially be made public.

Remember that knowing these risks does not mean that you should avoid LLMs, but that you should use them in an informed and responsible manner.

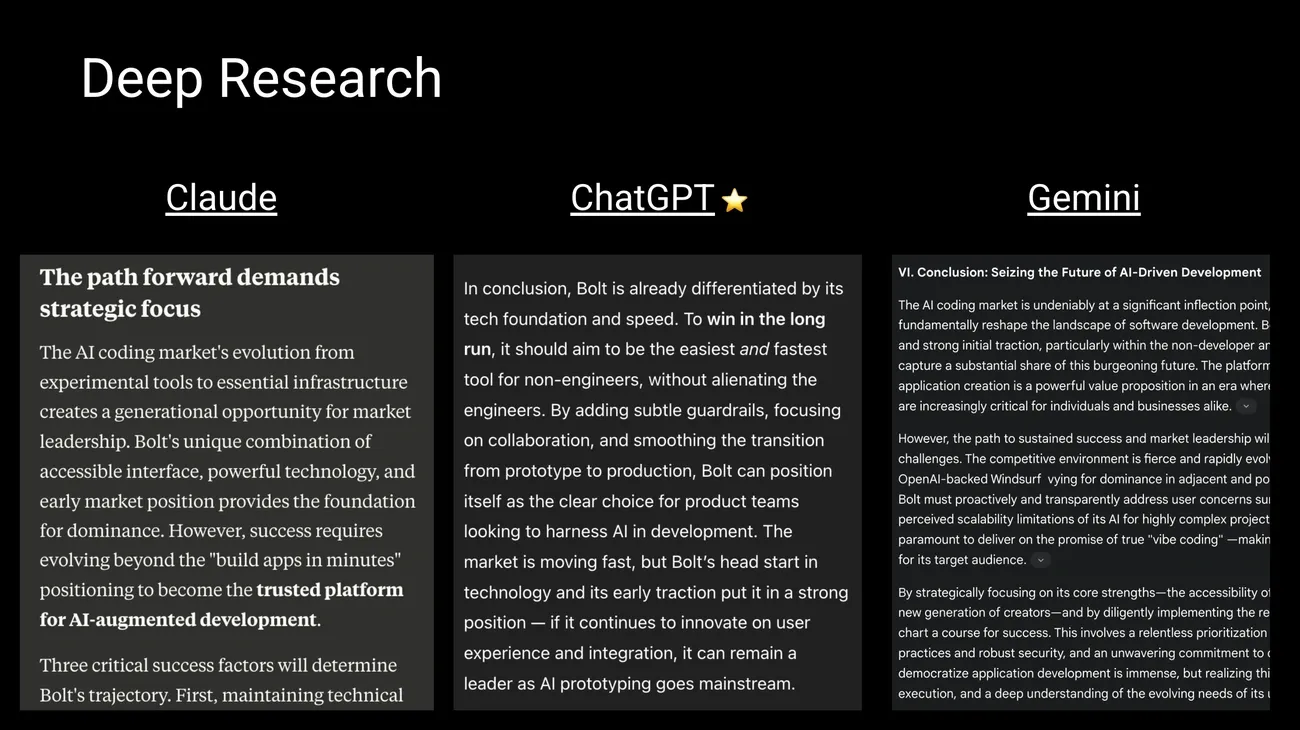

Most important LLM models in 2025: which one to choose?

Image Source: Behind the Craft by Peter Yang

Wondering what the best LLM model is for your projects? The landscape is constantly evolving, with new models improving the capabilities of their predecessors. Below, we show you the most outstanding options so that you can choose the most suitable one according to your needs.

GPT-4, Claude 3 and Gemini 1.5: the market leaders

Business models currently dominate the market thanks to their advanced capabilities. OpenAI’s GPT-4o stands out for its real-time multimodal capability, processing text, images, and audio with a minimum latency of approximately 300 ms. If you need a model for tasks that require quick responses and media processing, this is a great option.

Anthropic’s Claude 3 Opus excels at complex reasoning and problem-solving tasks, outperforming GPT-4 in benchmarks such as graduate-level expert reasoning (GPQA) and basic mathematics (GSM8K). We recommend Claude if you work with deep analysis or need to solve problems that require advanced logic.

Google’s Gemini 1.5 Pro offers impressive multimodal analytics capabilities and can process up to 1 million tokens, allowing you to analyze lengthy documents or even hours of video. It’s ideal if you need to process large volumes of information in one go.

Open Source Alternatives: LLaMA 3, Mistral, and DeepSeek

Prefer full control over your data? Open source models are advancing rapidly. Meta’s LLaMA allows you to locally install versions with different parameter sizes, keeping all your information on your own server.

Mistral stands out for its efficiency and offers versions optimized to run on both CPU and GPU. If you have limited resources but need good performance, Mistral may be your best option.

DeepSeek has gained popularity for its exceptional performance in reasoning and coding, competing directly with commercial models. The main advantage of these free alternatives is that all the information remains on your device, ensuring complete privacy of your data.

How to compare parameters and token capacity?

Capabilities vary significantly depending on the architecture of each model. GPT-4o offers a context of 128,000 tokens, while Gemini 1.5 Pro raises this limit to 1 million (with plans to expand it to 2 million). Claude 3 Opus handles up to 200,000 tokens, allowing you to analyze very large documents.

Remember that size also matters: although the exact figures are confidential, it is estimated that GPT-4 contains approximately 1.8 trillion parameters, while Gemini 1.5 Pro is around 1.5 trillion and Claude Opus approximately 200,000 million parameters.

Choosing the right model will depend on your specific needs: budget, volume of data to be processed, level of privacy required, and type of tasks you’ll perform.

Where are LLMs going? The trends that will shape the future

Have you ever wondered what LLMs will look like in the coming years? The evolution of these models is advancing at a rapid pace, and knowing the trends will help you prepare for the changes that are coming.

Models that understand everything: multimodality and extensive contexts

The most important trend you’ll see is multimodal integration. New models such as Gemini 1.5 and GPT-4o

In addition, the ability to handle large contexts has taken an extraordinary leap. Whereas models previously “forgot” information after a few pages, they can now process up to 1 million tokens on Gemini, with plans to reach 2 million soon. This allows you to analyze entire documents, entire books, or even hours of video without losing the thread of the conversation.

More efficient models: fewer resources, better performance

One trend that will directly benefit you is quantization. This technique reduces the numerical accuracy of the model’s weights, moving from 32-bit representations to more compact formats such as INT8 or INT4. What does this mean for you? Models up to 8 times smaller, lower power consumption and faster response speed.

Models like DeepSeek-V3 prove that you can get exceptional performance at significantly lower costs. If you plan to use LLM in your company, this trend will allow you to access advanced capabilities without the need for large investments in hardware.

Regulation: the new rules of the game

It recalls that the European Union has enacted the first comprehensive law on AI, with gradual implementation until 2026. This framework classifies systems according to their level of risk and imposes proportionate obligations, prohibiting practices deemed unacceptable. China, Canada, and other countries are also developing their own regulatory schemes.

What impact will this have on your daily use? Greater transparency in how the models work, better protection of your personal data and higher standards of quality and security.

The balance between innovation and responsible control will determine how quickly you can access these new capabilities and under what conditions.

Conclusion

What have we learned about LLMs along this journey? Primarily, that these models represent extraordinary technology that is already changing the way we work and communicate.

Remember that LLMs work by processing huge amounts of text to learn patterns in human language. Their transformer-based architecture allows them to understand context and generate consistent, though not always accurate, responses.

As for practical applications, you can use them to generate content, summarize documents, translate texts or create virtual assistants for your business. The productivity they offer is considerable: they can save between 30% and 90% of the time in routine tasks.

However, be aware of its important limitations. Hallucinations occur 2.5% to 15% of the time, biases are present in their responses, and you should be cautious about the sensitive information you share.

If you are going to use these models on a regular basis, we recommend that:

• Assess your specific needs before choosing between commercial options such as GPT-4o, Claude 3, or Gemini 1.5 • Consider open-source alternatives such as LLaMA or Mistral if you need more control over your data • Always verify the important information generated by these systems

The future points towards more efficient, multimodal models with longer contexts. Regulation is also coming, especially in Europe.

As a user of these technologies, your understanding of these concepts will allow you to better harness their potential while avoiding their main risks. The key is to use them as powerful tools, but always with human supervision and judgment.

Key Takeaways

LLMs represent a technological revolution that is transforming the way we interact with machines and process information. Here are the essential points you should know:

• LLMs are language-specialized AI models that use Transformer architectures to process trillions of parameters and generate human-like text.

• Its training requires massive resources: GPT-3 consumed 1,287 MWh of electricity and produced more than 500 tons of CO2, equivalent to 600 transatlantic flights.

• Practical applications include content generation, multilingual translation, code conversion, and virtual assistants that save 30-90% of the time on routine tasks.

• The main risks are hallucinations (2.5-15% of false answers), biases inherited from training data, and privacy and security vulnerabilities.

• The future points towards more efficient multimodal models, contexts of up to 2 million tokens, and responsible regulation such as the new European AI law.

Understanding these capabilities and limitations will allow you to harness the potential of LLMs as you responsibly navigate this ever-evolving technological landscape.

Remember that you can use the full power of the llm models in your AI agents with n8n and use their processing models to your workflows.

FAQs

Q1. What exactly is an LLM and how does it work? An LLM (Large Language Model) is an artificial intelligence model designed to understand and generate human language. It works by analyzing huge textual datasets and using deep neural networks to learn linguistic patterns and generate coherent text.

Q2. What are the most common practical applications of LLMs in 2025? The most common applications include automatic content generation, multilingual translation, code conversion between programming languages, and advanced virtual assistants for customer service and business productivity.

Q3. What are the main risks associated with using LLM? The most significant risks are hallucinations (generation of false information), the perpetuation of biases present in training data, and privacy and security issues related to the handling of sensitive data.

Q4. How is the performance of an LLM evaluated? Performance is evaluated using metrics such as perplexity, which measures the predictive capacity of the model, and standardized benchmarks that evaluate different capabilities such as comprehension, reasoning, and text generation. Adversarial evaluations are also used to test the robustness of the model.

Q5. What are the future trends in LLM development? Trends include the development of multimodal models that integrate text, image, and audio, the ability to handle longer contexts (up to millions of tokens), downsizing techniques to improve efficiency, and a focus on responsible governance and regulation of these technologies.